1 Introduction

The path through various health outcomes such as serious diseases, disability and death develop sequentially over one’s life span. Many factors such as genetic and epigenetic factors, and health related behaviors throughout one’s life condition those health outcomes. Some individuals develop one or more diseases and their health deteriorate faster, worsening their quality of lives and survival probabilities, while others have slower aging process. The biology of aging process determines how those factors affect aging at the cellular level. In this paper, with insights from the biology literature, I use statistical and neural network models for predicting and estimating the transition probabilities between health states and the times they spend in a health state before transiting to another health state for individuals in their mid ages. While the framework I develop in the paper is applicable to many other heath outcomes such as incidence of cardiovascular and immune diseases, I focus on one health outcome of interest—any of the disabilities that qualifies for a public disability insurance program—with death as a competing risk health outcome. Death is a competing risk heath outcome in the sense that once dead, one cannot be at risk of disability, or as a matter of fact, any other health outcome of interest.

The main issues I address in this paper are: What kind of aging process and what conditioning factors for it the biology literature recommends? Which type of model—a multistate statistical model, a feedforward multilayer perceptron (MLP) type of neural network model, or a long short memory (LSTM) recurrent neural network (RNN) model—that is better suited for prediction and estimation of transition probabilities among health states, taking into account the effect of various covariates (the conditioning factors)? Which model can estimate the effect of various conditioning factors and their relative importance on the type of health trajectory one follows?

I will not get into the details of the biomedical literature on these issues. Here I will point out the main insights from my reviews elsewhere, (Raut 2019a, 2019b). Similar to the literature of behavioral genetics of personality and intelligence, the nature-nurture controversy exists in the health literature: Is it all nature (i.e., all genetics or genome) or is it all nurture (i.e., all epigenetics or epigenome modulated by the environment and health related individual behaviors) that determines the progression of health over the life span of an individual? The consensus so far is that it is neither the nature nor the nurture, it is a combination of the two that determines health developments over one’s life-span. The research so far found that certain genetic make-ups (i.e., certain sequences of DNA) predispose one to certain diseases, ((Barondes 1999; Khoury et al. 2009; Bookman et al. 2011)), but the epigenetic inputs—especially at the very early stage of life, i.e. in the womb, but not the least at later stages of life—are also very important determinants of life expectancy and quality of life. The biomedical research so far has not found genes that are responsible for aging and age related diseases, leading to early disability and mortality. The twenty-first century biomedical research emphasizes more on the epigenetic factors than the genetic factors to explain the pattern of health developments over the life-span.

At the cellular level, aging means cellular senescence—i.e., after a certain number of cell divisions, it stops dividing or have defective replications, causing tissues or organs to increasingly deteriorate over time. Senescence leads to incidence of degenerative diseases. It is generally observed that women live longer than men and those with better life styles in terms of smoking, exercising and diets delay the aging process (for evidence, see (Blair et al. 1989; Vaupel 2010; Austad and Fischer 2016; Zarulli et al. 2018)). This line of biological inquiry led to explore the (cellular) molecular mechanism of aging process and to find biomarkers of aging that can be used to diagnose, monitor, and improve the age related physiological decline and disease. A good indicator of the aging process at the cellular level is the rate of decay in the telomere length. Telomeres are the caps at the end of chromosomes in a DNA sequence. They look like the plastic caps at the end of shoelaces. The main function of telomeres is to protect cells preserving the genetic content within each chromosome during cell divisions. Unfortunately, the telomere length shortens in the course of each cycle of chromosomal replication during cell division, reaching the Hayflick limit (about 40 to 60 cell divisions, Hayflick (1965)) with a critically short telomere length, after which the cells stop dividing or divide with chromosomal abnormalities. The rate of shortening of the telomere length is modulated by telomerase enzyme. Why the rate of decay in telomere length varies for individuals is an active area of biomedical research and the mechanism for it is not yet fully understood. Many studies find that higher stress of any kind— psychological, financial, social and chemical—is strongly associated with higher oxidative stress, lower level of telomerase enzyme, and shorter telomere length. Furthermore, shorter telomere length is associated with health related phenotypes of poorer health and higher risks for cardiovascular and immune diseases (see, (Epel et al. 2004; Shalev et al. 2013; DiLoreto and Murphy 2015; Shalev and Belsky 2016; Simons et al. 2016)).

More recently emerged second line of biomedical research on aging and aging related diseases explores the epigenetic (which literally means on top of genetic) mechanism for these life-cycle processes. (See for instance, (Alisch et al. 2012; Barres and Zierath 2011; Boks et al. 2009; Esteller 2008; Hannum et al. 2013; Horvath 2013)).

The above literature emphasizes that aging and age related diseases are associated with shortening of telomere length and changes in global methylation, and that stress, smoking, drinking, chemical misuse, physical exercising, and diet are important modulators for these changes. The question remains, what are the critical periods or the developmental milestones in life-cycle that program the motions of health developments over the life-span of an individual?

Research along this line began with the striking findings of (Barker 1990, 1998) and later of Gluckman et al. (2008). They found strong associations between birth weight and many later life chronic diseases, including hypertension, coronary artery disease, type 2 diabetes, and osteoporosis. Many other studies find that much of health developments in later life is determined very early in life, during the prenatal period right after conception, i.e. in the womb. Sometimes it is said in social sciences that inequality begins in the womb. The effect of an environmental stress in the womb on later life diseases and developmental outcomes is known as programming. Gluckman et al. (2008) observes that ``like the long latency period between an environmental trigger and the onset of certain cancers, the etiology of many later life diseases such as cardiovascular disease, metabolic disease, or osteoporosis originate as early as in the intrauterine development and the influence of environments that created by the mother.’’ For more empirical evidence for the developmental origin of later life diseases, see (Barker 2007; Thornburg et al. 2010). The papres by (Kanherkar, Bhatia-Dey, and Csoka 2014; Barbara, Abdilla, and Calleja-Agius 2017) provide detailed descriptions of the biological process of development of life and health, starting from conception. They explain how the global DNA demethylation of the fertilized egg right after conception creates an epigenetic “clean slate” to start a new life, followed by rapid remythylation to reprogram the maternal and paternal genomes to create epigenetic configurations in the fetus which rapidly produce specialized cells of the body with cell divisions. The environment provided in mother’s womb during those times has long-term effects on the child’s later cognitive and other health developments. While inputs at early milestone ages are important for later age health, healthy living and good healthcare are still important for maintaining health in mid ages.

Studies in social sciences find that low socio-economic status (SES) are associated with inflammation, metabolic dysregulation, and various chronic and age-related diseases such as type 2 diabetes, coronary heart disease, stroke, and dementia, and that low SES create epigenetic changes in individuals that lead to faster biological aging even after controlling for health-related behaviors such as diet, exercise, smoking, alcohol consumption, or having health insurance, see for evidence, Simons et al. (2016). The study by Karakus and Patton (2011) uses the Health and Retirement Study data and after controlling for education, race, income, health risk indicators like BMI and smoking, functional limitations like gross motor index, health limitations for work, and income, they find depression at baseline leads to significantly higher risk for developing diabetes, heart problems, and arthritis and no significant effect on developing cancer during the 12 years follow-up period. Renna (2008) uses National Longitudinal Survey of Youth data to find no significant effect of alchohol use on labor market outcomes such as on earnings or hours of work. Seib et al. (2014) collected data on a sample of older women in Australia and found that severe traumatic life events create strong stress levels that influence them to have unhealthy living and diet measured by BMI and develop stronger and earlier health problems. Conti, Berndt, and Frank (2009) utilize the CES-D data in the Health and Retirement Study dataset to construct a measure of depression, and find that depression of men and women have significant negative effect on employment status, early retirement, and application for DI/SSI benefits.

Using insights from the above literature, I formulate a finite state continuous time stochastic process model of disablement process. I postulate that as individuals age, the homeostatic regulatory mechanism that controls physiological systems—respiratory, cardiovascular, neuroendocrine, immune, and metabolic—becomes more and more fragile in its ability to face internal and external stressors, leading to early occurrence of disease, disability and death. I use available bio-markers (such as BMI, CES-D, cognition) and health related behaviors such as smoking, and vigorous exercising along the life-course to explain how they affect the risk of chronic diseases, disabilities and death.

A multistate stochastic process framework is useful to study the effects of various covariates—the covariates that may be different for different intermediate health states—on the risks of disability and death.

The statistical models for estimation of transition probabilities are based on Markov processes and assume that transition intensities of these processes have Cox proportional hazard specifications, see for instance ((Aalen and Johansen 1978; Andersen et al. 1993; Andersen and Perme 2008; Crowther and Lambert 2017; Fleming 1978)). Markov models have short memories and proportionality assumption imposes serious limitations on the structure of the model, which could be far from the functional form of the true data generating process. Neural network models relax these limitations.

It is known that a feedforward multilayer perceptron (MLP), also known as a feedforward deep learning model, with a sufficient number of neurons in the hidden layer can approximate any function as closely as desired. That is, an MLP is one of the best `universal function approximator’ (Hornik, Stinchcombe, and White (1989)). A few papers—(Faraggi and Simon 1995; Biganzoli et al. 1998; Katzman et al. 2018; Lee et al. 2018; Ranganath et al. 2016)– used feedforward MLP networks to compute the survival probabilities when there is only one possible transition between two health states—alive and death– with the exception of Lee et al. (2018) who studied competing risks, by breaking death into various causes of death. Katzman et al. (2018) introduced more general non-linearity of of the covariate effects, but still kept the Cox proportionality assumption. Ranganath et al. (2016) assumed parametric form for the baseline hazard function as compared to the non-parametric form in Cox model, but they made the covariate effects nonlinear. Ren et al. (2019) consider a recurrent neural network, but the covariates are time-fixed at the initial time step. They also restricted to only one transition, i.e., a two-state model. None of these models deals with sequential framework where new information arise with time steps and update the previously estimated transition probability estimates. In these models, all the inputs from the past, present and the future times in the sample determine current probabilities. These models, with the exception of Ren et al. (2019) although with other serious limitations, have no ways to store information learned from the past inputs. After training these models, when new data arrive, these models cannot use this new data to update the predicted probabilities without losing information in the early periods.

A recurrent neural network (RNN) uses feedback connections or self connection of neurons in the hidden layer, and thus is capable of storing important information learned in the past in these recurrent neurons. Like an MLP is a universal function approximator, an RNN has the similar nice property that with sufficiently large number of hidden recurrent neurons, an RNN can approximate any sequence-to-sequence mapping ((Graves, Wayne, and Danihelka 2014; Hammer 2000; Siegelmann and Sontag 1992)). These models have shared weights between time-steps and in the input and output layers, as a result when new data arrive after training the model, it can use all the past important information learned from the past to this new data point and predict the future probabilities in the light of this new data. Since training such models involve computation of gradients using backpropagation through time, it involves multiplication of numbers less than one many times, leading to vanishing gradient problem. In these scenarios, it cannot keep useful information in memory from the long time back. Overcoming these problems led to a few modifications of the RNN framework. The most successful of them is the long short memory (LSTM) RNN model introduced by Hochreiter and Schmidhuber (1997). For more on LSTM-RNN models, see Graves (2012). I use this LSTM-RNN model for prediction of time-to-event probabilies of health outcomes. Another problem is with the training data size. To obtain good predictive performance, these models require a large number of training examples. In drug discovery problems or with surveys or lab experiements, obtaining large number of examples is costly. To overcome small data problem, (Altae-Tran 2016; Altae-Tran et al. 2017) introduced further refinement of the LSTM-RNN framework. I do not adopt such modifications. In this paper, I use the original LSTM-RNN model specified in Graves (2012) and implemented in Keras module of Tensorflow 2.0 for Python package.

The rest of the paper is organized as follows. In Section 2, I describe the econometric specification of the maultistate stochastic process and describe estimation issues. In Section 3, I describe the Health and Retirement Study data set and the variables that I use in estimation in both frameworks. In Section 4, I describe the recurrent neural network with LSTM memory cells that I use to extend the multistate Cox model of health outcomes process. In Section 5, I describe the performance criteria that I use to compare the performance of the models used in this study. Section 6 concludes the paper.

2 Statistical multi-state model of health outcomes process

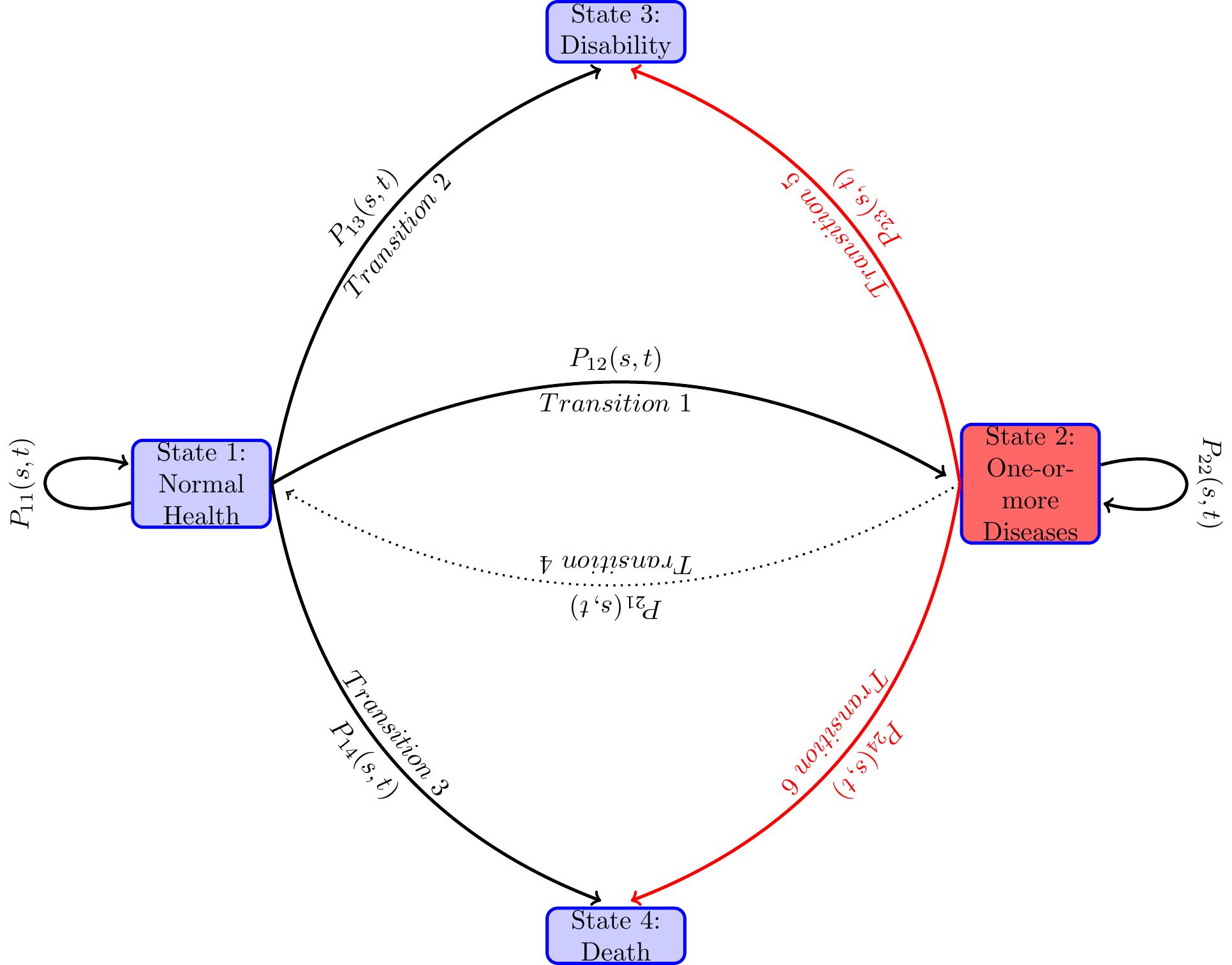

The goal is to formulate and then estimate an econometric model of paths to enter disability rolls. An individual can be on the disability rolls if the individual has a qualifying disability before reaching age 65 and has not died before applying for disability benefits. I assume that an individual’s getting on the disability rolls is a terminal event, i.e., the individual does not move to normal or diseased health states. After reaching this state, the individual is not followed any further. A competing risk for getting on the rolls is death before age 65. This is a competing risk because an individual cannot be at risk for disability enrollment if the individual is already dead and thus not at risk to get on the disability rolls. The individual is not followed after the event of death because our primary interest is the event of getting on to the disability roll. In the technical terms defined below, we treat the health states—disability and death—as absorbing states, i.e., once in that health state, an individual remains in that health state and removed from the sample for later considerations. An individual can be in normal health and then become disable or die before becoming disabled or may first become diseased with one or more diseases and again from that health state become disabled or die before becoming disabled. Various factors affect individual risks of various transitions of health states and the time they stay in each health state along the life-span. Both, in turn, determine the timing of getting on to the disability rolls.

I model the paths through various health states that individuals follow along their life-spans as a continuous-time finite-state Markov process , where at each time point during the study period , the random variable takes a value from a finite number of health states in . In the present study, we take , treating age as time period and age as time period . The unit of time is 2 years, as HRS collected data every two years. The state space contains states, “healthy or normal health”, “ill with one or more chronic disease”, “disabled with DI-or SSI-qualifying disability” and “Death”. Sometimes I will use in place of .

Typically in the study period, an individual along the path to disability or death before age 65 may be in the normal health state for a length of time, and then moves to another health state, say diseased health state, and remain there for some time, and then jump to the health state of disability or to death, or reach 65 and censored. There are many possible paths that an individual can follow. Even when the health states they pass through are the same, the duration of stay in each health state (also known as the waiting time in stochastic process literature) could vary. Each configuration of visited states and the waiting times in those states constitute one path. When time is continuous, an uncountably large number of paths are possible. From the diagram below one can see various paths that an individual may follow during the study period. The focus of the paper is to study the probabilities of various transitions and the duration of stay in each health state.

Let the transition probabilities of our Markov process be given by

for all , and , . Denote the matrix of transition probabilities by

An individual may be in any of the health states in at time , the probability of which, known as the occupation probability, depends on the occupation of the previous health states. and the transition probabilities among health states. Let be the occupation probability of an individual in health state at time . Occupation probability can be also viewed as the proportion of population of age who are in health state . Denote all the occupation probabilities as a column vector . Then the occupation probabilities move over time recursively as follows,

The goal is to estimate for each time time the transition probability matrices , taking into account the effects of (i.e., conditioning on) the past materialized values of the determining factors, that is covariates, and the stochastic process up to time .

It is known that the transition probabilities of a Markov process satisfies the Chapman-Kolmogorov equation

I will use the above to point out how the transition probabilities are parameterized and statistically estimated by the Aalen-Johnson non-parametric estimation method that plugs in the Nelson-Aalen estimates of the integrates hazards for each transition separately in an unified way for both continuous time and discrete time Markov processes.

I assume that the transition probabilities are absolutely continuous in and A transition intensity— also known as the hazard rate in the survival analysis literature when there is only one possible transition (alive to death), and as the cause-specific hazard rate in the competing risk analysis when death is split into various causes of death1 —of the health process from health state to health state at time is the derivative

For absorbing states , the transition intensities , for all . Denote the matrix of transition intensities by

Every Markov stochastic process has an associated intensity process. In the theory of stochastic processes, it is known that given an intensity function , there exists a continuous time Markov chain satisfying the Chapman-Kolmogorov Equation 2 and conversely, given a Markov chain with transition probabilities , that satisfies the Chapman-Kolmogorov Equation 2, one can derive the transition intensities of the form in Equation 4. For this reason, the intensity function is also referred as infinitesimal generator of the Markov process. While is an unconditional probability, the transition intensity or the hazard rate is the conditional (instantaneous) probability. More specifically, is the probability that an individual experiencing event in a very small interval of time given that he has been in state at time . This conditional probability may depend on time and other characteristics up to time .

In statistical and neural network models, the dependence of transition probabilities on individual characteristics is generally done through parametric or semi-parametric specification of the transition intensity functions . One then estimates the transition probabilities from the non-parametric or semi-parametric estimates of the integrated transition functions. An integrated transition intensity function for a transition is defined by . Just like for a continuous random variable, it is easier to estimate its cumulative density function nonparametrically than its density function, for the time-to-event data with censoring, it is easier to estimate the integrated hazard function than the intensity function. While for the discrete case this problem does not arise, estimation of transition probabilities via nonparametric estimates of the integrated hazard function is an unified approach encompassing both discrete time and continuous time data. I follow this strategy.

To explain and gain insights into this estimation strategy, denote the matrix of all the cumulative hazard functions as , and the matrix of the derivatives of the component functions by . Let the time interval is subdivided into a partition of sub-intervals with cut-off points Denote the partition by Denote the largest size of the sub-intervals by Applying repeatedly the Chapman-Kolmogorov equation on the sub-intervals of the partition, we have

Note that as the transition probability matrix .2 As , the right hand side of converges to a matrix, known as the the product integral3 of the integrated hazard functions , denoted as . Or in other words, the transition probabilities of a stochastic process parameterized via an intensity process is given by the product integral of integrated hazard function.

The above product-integral solution is a generalization of the Kaplan-Meier (Kaplan and Meier (1958)) product-limit formula for the survival function in survival analysis. The product integral formula unifies both discrete time and continuous time Markov processes, and is an extremely useful apparatus for statistical analysis of Markov processes.

The most widely used statistical procedure to estimate the transition probabilities , is to plug in an estimate in Equation 6. The effect of covariates is incorporated by conditioning the transition intensity functions on the covariates process . There are many ways to get these estimates. I will follow two approaches in this paper: First, I will explore the more widely used non-parametric Aalen-Johnson-Fleming method via Nelson Aalen estimates for each-component of the with Cox proportional hazard model to incorporate the time-varying covariate effects in the next sub-section. Second, the Neural network approach explored in a later section.

2.1 Aalen-Johansen Estimator of Transition Probabilities

Most widely used statistical procedure incorporates the time-varying covariates for the transition probabilities by specifying a semi-parametric functional forms for the intensity hazard functions

is known as the baseline hazard function. The specification of transition intensity in Equation 7 is known as the proportional hazard model. It aggregates the effects the regressors linearly as a measure of some kind of latent factor, and that latent factor shifts the baseline hazard proportionately, i.e., the effect on hazard is uniform over time. Two papers — Fleming (1978) and Aalen and Johansen (1978) — independently extended the Kaplan-Meier nonparametric product limit estimator from survival analysis to the multi-state time to event models. While Fleming (1978) gave the estimator for complete data, Aalen and Johansen (1978) gave the estimator for censored data. To describe the Aalen-Johansen estimator, let me introduce some concepts and notation. For each individual and corresponding to each transient health state, , define two types of stochastic processes: (1) the counting processes denoting the observed number of transitions from health state to health state that the individual has made by time —which in our case is either or , since by assumption when an individual exits a health state, the individual does not return to it in future ; and (2) , taking value if individual is at risk at time for transition to another possible health state, and taking value otherwise.

Let us focus on one transition . Denote by , a counting process measuring the number of transitions of the in the sample at time , , a counting process measuring the number of individuals in the sample at risk for a transition at time , and . In any empirical study the data will be at the discrete times, say in ordered times . At each time , we calculate

Without covariates, the Nelson-Aalen non-parametric estimate of the integrated intensity functions is given by, for each

The Aalen-Johansen estimator for the transition probabilities is obtained by substituting for each component the Nelson-Aalen estimates and then applying the product integral formula Equation 6 as follows

With covariates one obtains the Cox partial likelihood estimate for for each transition separately and then computes an weighted risk set defined by

The estimates of cumulative intensities with covariates are obtained from Equation 8 by replacing, with

Nelson-Aalen estimator has nice statistical property. For instance, using Martingale calculus, it can be shown that the estimator is asymptotically unbiased. Using the results from Martingale theory, one can derive the formula for variance-covariance estimates of parameter estimates and the normalized estimate is normally distributed (central limit theorem holds for normalized parameter estimates), see for details, (Aalen, Borgan, and Gjessing 2008; Andersen et al. 1993; Fleming and Harrington 2005).

The likelihood of the sample (for details, see (Andersen et al. 1993; Andersen and Perme 2008; Commenges 2002)),

I will use this likelihood function in construction of the log-likelihood loss function for the neural network models.

I use the R package, mstate, developed and described by the authors in Wreede, Fiocco, and Putter (2010) for the estimation of the parameters and their standard errors?

The Aalen-Johnson nonparametric estimates without any covariates of the transition probabilities, are shown in Table 2 and plotted in Figure 2.

For the Cox regression parameter estimates, I have used both the R package mstate (see, Wreede, Fiocco, and Putter (2010) for details) and also used the SAS procedure phreg (both produced the same estimates) and used the mstate package to estimate all the transition probabilities (SAS does not have readily available procedure for this purpose). The parameter estimates are shown in Table 3 and Table 4.

3 The data set and the variables

I use the Health and Retirement Study (HRS) dataset for empirical analysis. A lot has been reported on the family of HRS datasets—about its structure, purpose, and various modules collecting data on genetics, biomarkers, cognitive functioning, and more, see for instance (Juster and Suzman 1995; Sonnega et al. 2014; Fisher and Ryan 2017). The first survey was conducted in 1992 on a representative sample of individuals living in households i.e., in non-institutionalized, community dwelling, in the United States from the population of cohort born during 1931 to 1941 and their spouses of any age. ``The sample was drawn at the household financial unit level using a multistage, national area-clustered probability sample frame. An oversample of Blacks, Hispanics (primarily Mexican Americans), and Florida residents was drawn to increase the sample size of Blacks and Hispanics as well as those who reside in the state of Florida’’, Fisher and Ryan (2017). The number of respondents were 13,593. Since 1992, the survey were repeated every two years, each is referred to as a wave of survey. New cohorts were added in 1993, 1998, 2004 and 2010, ending the survey up with the sample size of 37,495 from around 23,000 households in wave 12 in 2014. RAND created many variables from the original HRS data for ease of use. I create my dataset and all the variables with a few exceptions mentioned below from the RAND HRS dataset version P. The details of the Rand HRS version P can be found in Bugliari et al. (2016).

As mentioned in the introduction, I define the disability health state to be one that qualifies one to be on the disability programs OASDI or SSI. The data on disability is self-reported. Later I plan to use the Social Security Administration’s matched administrative data on this variable and earnings variables not included here. The matched data will, however, reduce the sample size to half, as only 50 percent of the respondents are used for matching HRS with SSA Administrative data. The HRS data collected information on if and when the doctor diagnosed that the respondent has any of the severe diseases such as high blood pressure, diabetes, cancer, lung disease, heart attack, stroke, psychiatric disorder and severe arthritis. I drop respondents who received disability before the first survey year 1992 and I also drop the spouses in the sample who were not born between 1931 to 1941, that is the respondents in our sample are between age 51 to 61 and not disabled or dead in 1992. I ended up with the final sample size of 9,493 for this analysis.

Table 1 provides a few characteristics of the data.

| Survey Year | Alive:normal health | Alive: diseased | Became disabled | Died before disability | 65+: censored | Total |

|---|---|---|---|---|---|---|

| 1992 | 3026 | 6483 | 92 | 0 | 0 | 9601 |

| 1994 | 2591 | 6608 | 166 | 144 | 0 | 9509 |

| 1996 | 2291 | 6623 | 139 | 146 | 0 | 9199 |

| 1998 | 1726 | 5478 | 134 | 123 | 727 | 8188 |

| 2000 | 1279 | 4313 | 86 | 113 | 741 | 6532 |

| 2002 | 848 | 3148 | 54 | 58 | 759 | 4867 |

| 2004 | 468 | 1893 | 34 | 48 | 781 | 3224 |

| 2006 | 132 | 636 | 4 | 14 | 795 | 1581 |

3.1 Variables

The demographic variables White and Female have the standard definition. The variable College is a binary variable taking value 1 if the respondent has education level of college and above (does not include some college), i.e., has a college degree and more and taking value 0 otherwise.

cesd: I used a score on the Center for Epidemiologic Studies Depression (CESD) measure in various waves that is created by RAND release of the HRS data. RAND creates the score as the sum of five negative indicators minus two positive indicators. ``The negative indicators measure whether the Respondent experienced the following sentiments all or most of the time: depression, everything is an effort, sleep is restless, felt alone, felt sad, and could not get going. The positive indicators measure whether the Respondent felt happy and enjoyed life, all or most of the time.’’ I standardize this score by subtracting 4 and dividing 8 to the RAND measure. The wave 1 had different set of questions so it was not reported in RAND HRS. I imputed it to be the first non-missing future CESD score. In the paper, I refer the variable as cesd. Steffick (2000) discusses its validity as a measure of stress and depression.

cogtot: This variable is a measure of cognitive functioning. RAND combined the original HRS scores on cognitive function measure which includes “immediate and delayed word recall, the serial 7s test, counting backwards, naming tasks (e.g., date-naming), and vocabulary questions”. Three of the original HRS cognition summary indices—two indices of scores on 20 and 40 words recall and third is score on the mental status index which is sum of scores “from counting, naming, and vocabulary tasks” — are added together to create this variable. Again due to non-compatibility with the rest of the waves, the score in the first wave was not reported in the RAND HRS. I have imputed it by taking the first future non-missing value of this variable.

bmi: The variable body-mass-index (BMI) is the standard measure used in the medical field and HRS collected data on this for all individuals. If it is missing in 1992, I impute it with the first future non-missing value for the variable.

behav_smoke: This variable is constructed to be a binary variable taking value 1 if the respondent has reported yes to ever smoked question during any of the waves as reported in the RAND HRS data and then repeated the value for all the years.

behav_vigex: The RAND HRS has data on whether the respondent did vigorous exercise three or more days per week. I created this variable in each time period to take the value 1 if the respondent did vigorous exercise three or more days per week.

3.2 Statistical Results

| Duration | |||||||

|---|---|---|---|---|---|---|---|

| 0 | 1.0000 | 1.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| 1 | 0.8711 | 1.0000 | 0.1289 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| 2 | 0.7543 | 0.9767 | 0.2367 | 0.0062 | 0.0127 | 0.0027 | 0.0106 |

| 3 | 0.6570 | 0.9430 | 0.3105 | 0.0201 | 0.0292 | 0.0123 | 0.0279 |

| 4 | 0.5786 | 0.9082 | 0.3651 | 0.0331 | 0.0477 | 0.0232 | 0.0441 |

| 5 | 0.5126 | 0.8730 | 0.4087 | 0.0446 | 0.0659 | 0.0341 | 0.0610 |

| 6 | 0.4761 | 0.8421 | 0.4212 | 0.0562 | 0.0792 | 0.0465 | 0.0787 |

| 7 | 0.4667 | 0.8229 | 0.4116 | 0.0681 | 0.0896 | 0.0536 | 0.0874 |

With only demographic covariates (most that can be done with the Administrative data) the parameter estimates in Table 3 show that significantly lower risks of transitions ; ; for whites and and for women. This may entail that the genetic make-up of being white or female sex yield favorable genetic predisposition to have better health outcomes and longer life. This is a misleading inference as we will see next that when we control for epigenetic factors that the biomedical literature pointed out to have significant effects on aging process and health outcomes, the above effects disappear.

Table 4 shows the Cox regression coefficient estimates of the effects of various factors on the risk of having transitions from health status to health status .

These estimates show that the parameter estimates for the demographic covariates in Table 3 are biased as they are capturing the effects of excluded epigenetic and behavioral factors in that model. After controlling for these epigenetic and behavioral factors, the significance of those effects disappear. Furthermore, women show significantly lower probability of transition from diseased health states onto the disability health state.

Most important factors in Table 4 are cesd, measuring depression and stress and college graduation or higher level of education, with positive effect on all transitions with the exception of no effect on transition from normal health to death.

Other important factors are smoking, with significant adverse effect on transitions, and exercising three or more times regularly has significant favorable effect on most transitions.

| 1->2 | 1->3 | 1->4 | 2->3 | 2->4 | |

|---|---|---|---|---|---|

| White | -0.028 | -0.328 | -0.716 ** | -0.387 *** | -0.426 *** |

| (0.067) | (0.230) | (0.265) | (0.102) | (0.102) | |

| Female | 0.052 | -0.216 | -0.522 * | -0.029 | -0.493 *** |

| (0.050) | (0.190) | (0.251) | (0.092) | (0.093) | |

| #obs | 3583 | 3695 | 3652 | 6856 | 6855 |

| #events | 1602 | 112 | 69 | 476 | 475 |

| R squared | 0.000 | 0.001 | 0.003 | 0.002 | 0.006 |

| logLik | -12671.44 | -854.58 | -518.80 | -4038.09 | -3994.91 |

| AIC | 25346.88 | 1713.15 | 1041.60 | 8080.17 | 7993.81 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | |||||

| 1->2 | 1->3 | 1->4 | 2->3 | 2->4 | |

|---|---|---|---|---|---|

| cesd | 0.561 *** | 1.980 *** | 0.126 | 1.295 *** | 0.547 * |

| (0.120) | (0.352) | (1.109) | (0.155) | (0.256) | |

| bmi | 0.042 *** | -0.005 | 0.012 | 0.025 ** | -0.021 |

| (0.006) | (0.025) | (0.050) | (0.008) | (0.014) | |

| cogtot | -0.003 | -0.067 ** | 0.015 | -0.035 *** | -0.009 |

| (0.006) | (0.022) | (0.048) | (0.010) | (0.015) | |

| behav_smoke | 0.045 | 0.258 | 2.511 * | 0.381 *** | 0.817 *** |

| (0.054) | (0.226) | (1.028) | (0.108) | (0.180) | |

| behav_vigex | -0.197 ** | -1.000 *** | -1.169 * | -0.610 *** | -1.199 *** |

| (0.075) | (0.235) | (0.485) | (0.102) | (0.145) | |

| White | 0.045 | 0.098 | -0.494 | -0.117 | -0.325 * |

| (0.072) | (0.269) | (0.498) | (0.112) | (0.165) | |

| College | -0.111 | -0.887 * | -1.114 | -0.684 *** | -0.744 ** |

| (0.069) | (0.406) | (0.760) | (0.195) | (0.262) | |

| Female | 0.085 | -0.273 | -0.686 | -0.080 | -0.252 |

| (0.055) | (0.221) | (0.470) | (0.101) | (0.146) | |

| #obs | 3239 | 3334 | 3262 | 6165 | 5926 |

| #events | 1500 | 95 | 23 | 446 | 207 |

| R squared | 0.029 | 0.025 | 0.008 | 0.037 | 0.022 |

| logLik | -11647.65 | -671.06 | -151.05 | -3626.20 | -1626.01 |

| AIC | 23311.31 | 1358.12 | 318.10 | 7268.41 | 3268.01 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | |||||

4 Long-short memory (LSTM) recurrent neural network (RNN) model of health outcomes process

I first give an overview of the neural network framework. I then briefly review a selected few papers that used the neural network framework to estimate and predict the time-to-event probabilities in a single event set-up. The main focus of the papers, including this paper, is to relax the proportionality and linearity assumption of the statistical survival models, see Equation 7 for the proportionality assumption. I then explain why a long-short memory (LSTM) recurrent neural network (RNN) framework is more appropriate for predictive models of time-to-event probabilities in sequential set-up. I describe the c-index criterion that I use to compare the performance of various models and compare the performance of the multistate statistical model and a feed-forward neural network model of competing risk model by Lee et al. (2018) with the LSTM-RNN model of this paper. I code these three models using Keras and Tensorflow 2.0 modules in Python and estimate them using the Health and Retirement Survey data mentioned above. In the following section, I report the findings.

4.1 The basics of neural network

I first give an overview of the neural network framework. I then briefly review a selected few papers that used the neural network framework to estimate and predict the time-to-event probabilities in a single event set-up. The main focus of the papers, including this paper, is to relax the proportionality and linearity assumption of the statistical survival models, see Equation 7 for the proportionality assumption. I then explain why a long-short memory (LSTM) recurrent neural network (RNN) framework is more appropriate for predictive models of time-to-event probabilities in sequential set-up. I describe the c-index criterion that I use to compare the performance of various models and compare the performance of the multistate statistical model and a feed-forward neural network model of competing risk model by Lee et al. (2018) with the LSTM-RNN model of this paper. I code these three models using Keras and Tensorflow 2.0 modules in Python and estimate them using the Health and Retirement Survey data mentioned above. In the following section, I report the findings.

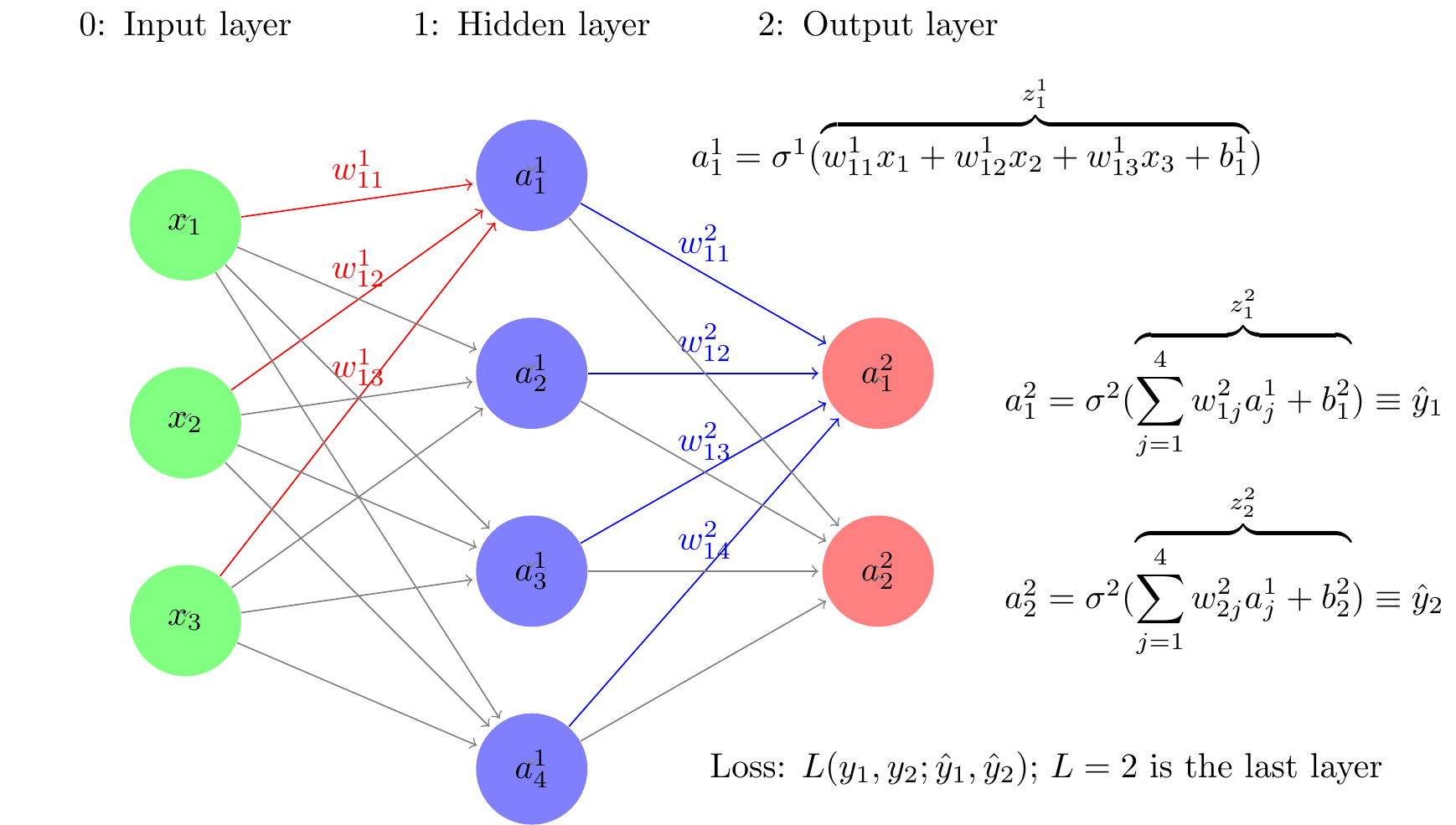

Neural network is a highly parameterized universal function approximator of the form , is a set of inputs, and is a vector of parameters. This is of the same nature as a statistical model. More precisely, suppose we have data on a set of individuals of the type , where x is a vector of individual characteristics, and y is a vector of output levels and is a set of parameters. The output could be a categorical variable for classification problems, it could be a probability distribution over finite classes, as in our case, or it could be a continuous variable for regression problems. The data generating process for as a function of x, is not known. The goal is to approximate that unknown data generating function. This is the problem that both statistics and neural network find computational solutions to. In neural network, the problem is to design a neural network architecture of the approximating function and find a suitable learning algorithm to learn the parameter values of the network using a training set of examples. This trained network can then be used to predict for an individual given his characteristics .

The popularity and wide applicability of neural network lies in the fact that it designs the approximator in a hierarchy of functions, joined together by compositions of functions, that renders good properties in terms of ease of computation and closeness of function approximation. Most neural network models have the following type of hierarchical functional form:

Each function corresponds to a layer of artificial neurons. The role of each neuron is to perform simple calculations and then pass on that to the next layer of neurons.

Neurons in each layer get signals which are the outputs of the neurons of the previous layer (also known as activation levels) that it is connected with. It sums them, I denote this sum with and apply an activation function to produce an output also known as activation level which I denote by . The activation level will then be passed on as an input to a neuron that it is connected to in the next layer. The neurons of the last layer will compute the output level taking the activation levels of the connected neurons of the previous layer.

Consider a simple neural network architecture depicted in Figure 3. It has three layers — layer 0: input layer, layer 1: hidden layer, and layer 2: output layer. Last layer in the text is denoted by , and hence . Layer has three input neurons. The second layer has 4 neurons. and last layer has two neurons corresponding to the two output levels, in our case probability of two events. In this neural network, the hierarchical function specification is of the form:

The function at each layer is a linear aggregator. In the notation, is a vector of functions, each component of which corresponds to a neuron of the ith layer. The function is a squashing function of the same dimension as , each component having the same function real valued function of one variable, known as activation function, which squashes the value of to a range such as for a sigmoid activation function, or for the activation function. The value of the activation function, is known as the activation level of the neurons of the ith layer. The activation levels of the 0th layer, , the inputs, fed to the neural network from outside. The operation on the right is also performed component-wise for each neuron at the ith layer it computes the weighted sum of the activation levels (outputs) of the neurons of the previous layer that the neuron of the ith layer is connected to. The weights used are specific to the neuron of the ith layer. An activation function which generally taken to be same for all the neurons of the ith layer) is applied to this aggregated value . These activation functions do not have any unknown parameters that need to be estimated. These two computations—aggregation and activation—are shown as a composite mapping for the neurons of the ith layer.

There are different types of neural network, depending on the functional form of in Equation 13. A deep feed forward neural network, also known as a feedforward neural network with hidden layers or as a multilayer perceptron (MLP) is a network architecture in which there is no feedback of any neurons to itself or to others in the same layer. The activation levels of the neurons only feed forward to the neurons in the next layer. This is the reason also why these type of neural networks are called feed forward, as opposed to the recurrent neural network consider later that allow feedback. The MLP has good computational properties and an MLP is a great universal functional approximator: It is shown Hornik, Stinchcombe, and White (1989) that with a sufficient number of layers in a hidden layer can approximate a function to any level of precision desired. So a MLP can be used to approximate the true data generating process as closely as one wants. How does one find such a network, i.e., how does one choose the weights of the network.

To get a good approximation, the artificial neural network contains hundreds of thousands of deep parameters , how does one train the network, i.e., how to learn the the parameter values. The learning is done by choosing the weights to minimize a loss function together with a nonnegative regularization term (in statistical term a regulerization term corresponds to shrinkage estimator).

In the present context of learning about a probability distribution of time-to-events, an appropriate loss function is to take the negative log-likelihood of the sample and the additive regularization term to be . In this case the loss function with regularization term has a Bayesian interpretation—it is the posterior log-likelihood with normal prior distribution for the parameters . The choice of to minimize the loss is done by a gradient descent method. The neural network architecture Equation 13 yields a very convenient fast and automatic computation of the gradients using an algorithm known as back-propagation algorithm, used pretty much in all types of neural networks. Two steps in this algorithm are assume an initial value of the weights , first compute all the activation levels starting at the input layer, i.e., forward through the layers in Equation 13, i.e., go through layer superscripts forward. In the next step, compute the gradients of the weight parameters of various layers, starting from the last layer, backward in layers, i.e., decreasing order in the subscripts in Equation 13. Once all gradients are computed, weights are adjusted using a steepest descent algorithm. Further details are omitted, since I do not implement any type of back propagation algorithm in this paper.

4.2 Previous neural network models of survival analysis

The main objective of the neural network approach to survival analysis is the relaxation of restrictive proportional hazard assumption in Equation 7. The following papers use a MLP architecture to estimate the survival probabilities with covariates fixed at the beginning of the study period. (Faraggi and Simon 1995; Biganzoli et al. 1998; Fernandez, Rivera, and Teh 2016; Katzman et al. 2018; Lee et al. 2018; Ranganath et al. 2016; Ren et al. 2019; Zhao et al. 2019). Except for Lee et al. (2018) who consider a competing risk model, all other papers consider two-state, alive-death, models.

Two main limitations of the previous literature is that they use feed-forward neural network architecture to specify the functional approximator for the hazard function in Equation 7. These are static model in the sense that the input vectors of an individual are given at the begin of the period and the neural network model predict the probabilities of the event for a fixed number of periods. This is problematic because prediction of probabilities at a given period use all the information from the future periods. It cannot handle training using data in which some individuals do not have information for all periods. More importantly, these models cannot incorporate new information that may come after training the model to update the time-to-event prediction probabilities. Another shortcoming of these models is that they are restricted to analyzing life histories of only one transition between two health states—from alive to death or at the most to various causes of death in the language of survival analysis. As I have mentioned in the introduction, to better predict the probabilities of time to certain health come of interest, it is important to analyze dynamic paths through other intermediary health outcomes and the factors that affect the dynamics of those paths. I argue that a long-short memory recurrent neural network is more appropriate model to that end. I explain this framework next.

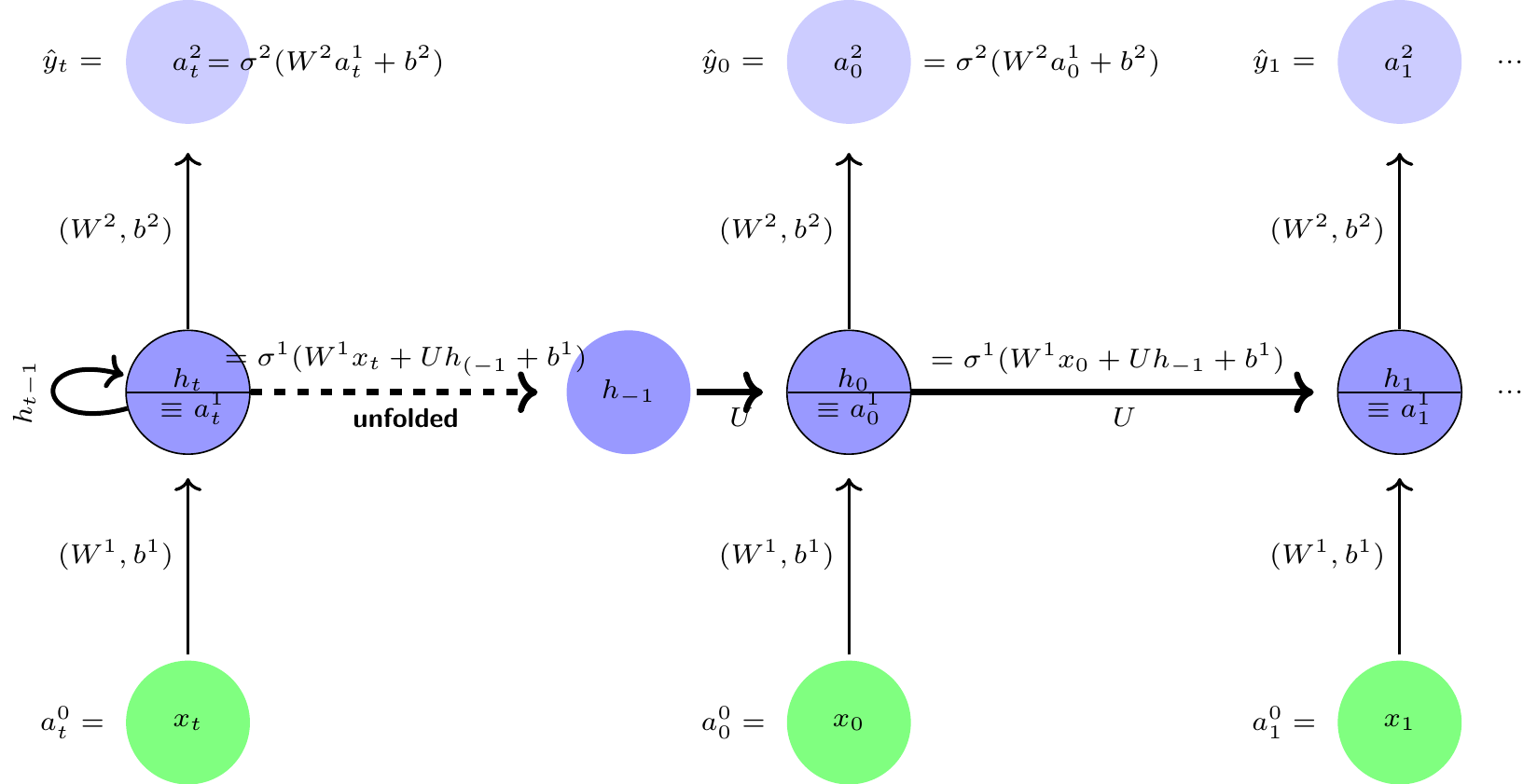

4.3 Formulation within Recurrent Neural Network with LSTM

Let be a set of measurements, or characteristics and , a set of health outcomes are measured repeatedly over time . A recurrent neural network (RNN) allows a feedback link among neurons in a hidden layer. A typical RNN is shown in the left panel and the unfolded version in the right panel of Figure 4. This neural network is a simple three layer architecture similar to MLP architecture shown earlier, with one main difference is that the hidden layer has a feedback connection linked to itself, i.e., a cyclical or recurrent connection and there is weight sharing, i.e., the weights of all the layers for a given time period is constant for all time periods. The recurrent connections in the RNN architecture effectively creates an internal memory of the effects of previous inputs that can affect the current and future outcomes. It is also interesting to note that the functional forms of the RNN are similar to Kalman filtering models, but more general in some respects and restrictive in some other respects.

The hidden layer 1: The activation levels of the hidden recurrent layer at time-step is produced by combining activation levels of the previous period’s hidden recurrent layer and input levels and then applying the activation function as follows: For Layer 1 (hidden recurrent layer):

Layer 2 (Output):

The pseudo computational graph of the network is shown in Figure 4.

It may seem a minor difference between the architectures of an MLP and an RNN, but difference in their capabilities are very important and crucial for sequential learning. For instance, an RNN can map the entire history of previous inputs to each output as compared to an MLP which can map only current input to output vectors. Similar to the result that an MLP is an universal function approximator mentioned earlier, with a sufficiently large number of hidden self-connected neurons, an RNN can approximate extremely closely any sequence-to-sequence mapping, see (Graves, Wayne, and Danihelka 2014; Hammer 2000; Siegelmann and Sontag 1992). There are various types of RNN, for details see (Graves 2012; Lipton, Berkowitz, and Elkan 2015). In many situations, especially in drug-discovery, the study samples may have small size, an important extension of an RNN framework that handles this problem is (Altae-Tran 2016; Altae-Tran et al. 2017).

The computation of the gradients is done using a modification of the back propagation method known as back propagation through time, which involves multiplication of gradients less than one many times over the time steps tending to vanishing gradient problem, meaning the gradient of the weights representing memories of the far back in time tend to zero or vanish. A similar problem arises when the gradients larger than 1 are multiplied many times leading to exploding gradient problem.

Quite a few other fixes are proposed for the vanishing and exploding gradient problems of RNN models, such clipping of gradients, and various other types of extensions such as Elman (1990) are proposed. But the long-short memory extension of the RNN framework by Hochreiter and Schmidhuber (1997) proved to be very useful and successful in many applications which I use for this paper.

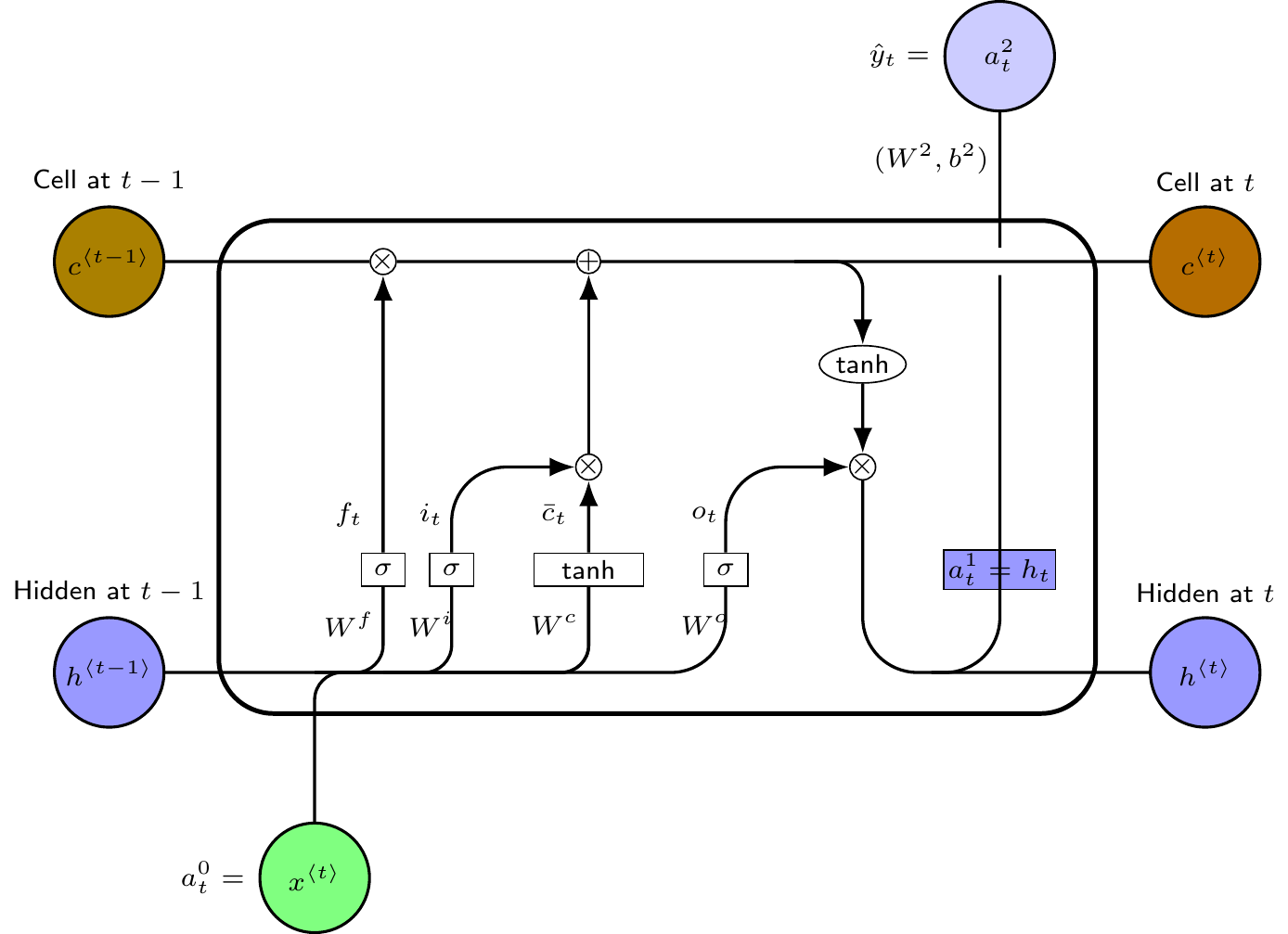

4.4 LSTM memory cell

The main innovation in this approach is to replace the hidden recurrent cells in an RNN with a memory cell with three gates—an input gate, an output gate and a forget gate—and a recurrent cell memory together with the original hidden layer memory cell. The functional form of a typical LSTM memory cell is as follows

Layer 1 (Hidden layer with recurrent neurons):

Layer 2 (Output): This is same as the RNN output layer. The pseudo computational graph of the network is shown in Figure 5.

5 Prediction and performance of the model

As a multistate time to event model has censored data, the standard metrics such as , root mean square error are not applicable for performance measurement. Harrell et al. (1982) introduce the measure of concordance index, known as c-index, extending the concept of the area under the ROC curve, which was further refined by Antolini, Boracchi, and Biganzoli (2005) to be applicable for survival models with time-varying covariates. Most machine learning models use this criteion to compare the performance of various neural network models of survival analysis.

To get the basic idea behind the c-index, suppose two individual in the dataset are in a particular health state, both of whom are at risk for exits to another possible health state. Suppose the first individual exits at time and the second individual did not exit at time or earlier. The first individual’s estimated survival probability at time should be smaller than the second individual who did not exit. A good model should have this maintained for most or all such comparable pairs. The c-index measures the proportion people for whom this is true out of all the people who could be compared.

I use the c-index criterion to discriminate the performance of various models in predicting time-to-event probabilities. The c-index estimates for three three models are shown in the last row of Table 5. Judging from the c-index estimates, it appears that neural network models do better job in prediction of time to event probabilities than Aalen-Johansen estimators of the multistate statistical model. Furthermore, the LSTM-RNN model of this paper does perform slightly better.

| Statistical multistate model | Lee etal Deephit model | LSTM-RNN model | ||||

| T | Disability | Death | Disability | Death | Disability | Death |

|---|---|---|---|---|---|---|

| 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.001 | 0.000 |

| 1.000 | 0.027 | 0.000 | 0.035 | 0.000 | 0.017 | 0.017 |

| 2.000 | 0.064 | 0.000 | 0.052 | 0.004 | 0.032 | 0.045 |

| 3.000 | 0.109 | 0.035 | 0.120 | 0.026 | 0.044 | 0.080 |

| 4.000 | 0.152 | 0.088 | 0.247 | 0.050 | 0.055 | 0.122 |

| 5.000 | 0.179 | 0.141 | 0.271 | 0.133 | 0.063 | 0.167 |

| 6.000 | 0.199 | 0.166 | 0.287 | 0.155 | 0.070 | 0.208 |

| 7.000 | 0.206 | 0.197 | 0.496 | 0.504 | 0.077 | 0.248 |

| c-index | 0.476 | 0.748 | 0.756 | |||

I also show in Table 5 the average of the predicted time to event probabilities of the individuals in the test data set, and in Figure 6, I plotted the average probabilities together with the confidence intervals. Looking at the variability in the graph, and direct comparison of probabilities for the individual cases, it appears that neural network models might be over-fitting the data, in spite of using the regularization. More investigation is needed.

So I based my comparison of the models using the c-index.

6 Conclusion

Individuals follow different health trajectories over their lives. Many factors along a health trajectory up to a given time period affect their health state at the time and the future paths that the individual will follows. Such sequential health developments conditioned by the health related behaviors along the path determine the risks of diseases, disability and death. This paper studies statistical and artificial neural network models for predicting time to an event of interest—disability—with death as a competing risk event during the mid ages and how different factors along the health trajectory affect these probabilities. The paper uses the Health and Retirement Study data for estimation of the models. The paper discusses the merits and demerits of these two approaches and compare their performances.

The paper formulates the life histories of individuals as a stochastic process through a finite set of health states. The main problem is to estimate the transition probabilities between health states and the effects of various time varying covariates on transition probabilities. The effect of covariates is parameterized through “transition intensity functions” in both statistical and neural network models. Statistical multistate models assume that in each transition, the covariates linearly affect a latent health measure and that latent health measure shifts a baseline hazard function (or transition intensity function) proportionately up and down by a multiplicative factor. This type of specification of transition intensities is known as Cox proportional hazard model. In many situations, including the present, this proportionality assumption imposes strong structure and could be far from the structure of the true data generating process. The multi layer perceptron (MLP), a kind of deep neural network (explained in the paper), is known to be an excellent `universal function approximator’. Utilizing this insight, recently a few papers use MLP models to relax the proportionality assumption. This paper argues that the prediction of progression through health states and estimation of the probabilities of health events of interest such as disability and death are better done in a recurrent neural network (RNN) model with long-short memory cells to capture the effects of long lagged health related behaviors and outcomes that affect the current and future health outcomes. The paper compares the performances of a statistical multistate model, and an MLP based competing risk model of Lee et al. (2018) and the LSTM-RNN model proposed in this paper. The paper finds that performance, measured by c-index, is much better for both the neural network models and is slightly better for the LSTM-RNN model. Since an LSTM-RNN neural model is more suitable for prediction in a sequential set-up, the findings prescribe that it is better to use an LSTM-RNN type of neural network model to estimate and predict transition probabilities than an MLP based neural network model.

For all three models, the paper included some epigenetic factors (that include health related behaviors), demographic factors, education level, some biomarkers like BMI, CESD and cognition and depression and stress level—time varying for the LSTM-RNN and multi-state statistical model and time fixed at the first period for the Lee et al. (2018) model. At present there are no neural network software package that can estimate and compare the relative importance of the covariates on transition probabilities. The statistical models are good at that. More research in neural network along this line will be useful.

From the estimates of the statistical model, the paper finds that college graduates have significantly lower probability of all the transitions. The variable CESD measuring the level of depression and stress has significant positive effects on transiting from normal health to diseased health state, from normal health to becoming disabled and from diseased health state to become disabled or to death. The other most significant behavioral variables are smoking and sufficiently vigorous level of regular exercising. The smoking has significantly adverse effects and exercising has favorable effects on most transitions.

For unfamiliar readers, the paper gives introduction to estimation of multistate stochastic models and the MLP and LSTM-RNN type of neural networks models when the data has censored observations.

While a statistical model is good for studying the effects of various covariates on time to event probabilities, judging from the performance based on the c-index, its performance as a predictive model is much worse than the two neural network models considered. Relaxation of the proportionality assumption to include more general specifications is a useful direction. For neural network models, it will be very useful to study the relative strength and statistical significance of various covariates on the outcomes such as transition probabilities through different health states that the models predict.

Appendix

MLP learning algorithm

The sets of neurons at layer are corresponding the three inputs, , i.e. hidden units, and at the output layer corresponding to outputs. Let , i.e., the number of neurons in the k-th layer of the network, for .

First compute all neuron outputs using the feedforward iteration: , with initial condition (input vector).

To calculate , and , denote by and , and calculate first all , , using the backpropagation iteration: , with the initial condition , where denotes the Haddamard product, i.e., element wise product.

Now compute and , . In matrix notation, and .

References

Footnotes

See for instance, Raut (2017) for a competing risk analysis in a similar context using the SSA Administrative data and compare that with the present framework.↩︎

From definition of transition intensities above and writing it in matrix form, we have . From this it follows that for small , we have .↩︎

For more formal treatment of product integral see Gill and Johansen (1990) and for a lucid exposition with some applications, see Gill (2005).↩︎